Beyond LLMs: Designing Multi‑Agent AI Systems for Enterprise Intelligence

Executive Summary

Multi-agent artificial intelligence (AI) systems represent a paradigm shift beyond monolithic large language models (LLMs). Rather than relying on a single model to handle every task, multi-agent architectures orchestrate a constellation of specialized agents—planning agents, retrieval agents, generation agents and evaluators—under a central controller. These agents can access data sources, call external services and coordinate complex workflows, enabling enterprise applications that are more robust, context-aware and efficient than anything a single model can deliver. The agentic AI market is expanding rapidly: research indicates that U.S. agentic AI revenues could grow from $2.4 billion in 2025 to $46.5 billion by 2034, with 29 percent of organizations already using agents and another 44 percent planning to adopt them. Adoption rates in healthcare, retail and manufacturing are approaching or exceeding 70 percent. Early adopters report 30 percent reductions in development overhead and 50–75 percent time savings on common tasks. This article offers a comprehensive guide to designing and deploying multi-agent AI systems in large U.S. enterprises, synthesizing the latest research from AI labs, vendor platforms and industry reports. It covers market drivers, technical architectures, ROI modeling and regulatory considerations, and concludes with actionable recommendations and frequently asked questions to help CIOs and CTOs lead their organizations into the age of agentic AI.

Introduction

The explosion of generative AI has transformed how businesses interact with data, automate operations and engage customers. Yet the limitations of single, monolithic LLMs have become apparent: they struggle to manage complex task orchestration, exhibit brittle reasoning when forced outside their training distribution and lack access to real-time enterprise data. This year has seen a decisive move toward multi-agent systems, in which multiple specialized models collaborate under an orchestration layer to perform sophisticated workflows. Instead of prompting one enormous model, users can delegate subtasks to dedicated agents that retrieve relevant information, plan sequences of actions, generate responses, evaluate output and call external services. The result is a network of cooperating agents that can handle higher-order reasoning, long-running workflows and integration across enterprise systems.

Major AI labs and vendors have accelerated this shift. Google’s research group introduced a “co‑scientist” that uses dedicated generation, reflection, ranking and evolution agents to propose novel hypotheses and iteratively refine them. Amazon launched AgentCore, a platform for building interconnected agents capable of analyzing data, writing code and performing tasks with eight-hour runtimes. OpenAI integrated a general-purpose agent into ChatGPT that can navigate calendars, generate slides and call external tools, drawing on both the Operator (remote browser) and Deep Research frameworks. These innovations signal a deeper trend: enterprise decision-making is shifting from deploying isolated AI models to designing ecosystems of intelligent agents that operate on context-rich data, abide by governance rules and deliver measurable business value. The following sections examine the forces driving this transformation, the architectures that enable it and the ROI enterprises can expect.

Market Context & Drivers

The enterprise appetite for agentic AI is expanding at an unprecedented rate. A June 2025 industry survey found that U.S. agentic AI revenue is projected to increase from $2.4 billion in 2025 to $46.5 billion by 2034—an astonishing compound annual growth rate of 39 percent. Globally, the market is expected to reach $7.6 billion by the end of 2025, up from $5.4 billion in 2024. Analysts attribute this surge to several converging trends:

- Exponential Adoption across Industries — According to multiple market studies, 85 percent of enterprises are expected to implement AI agents by the end of 2025. Adoption is particularly strong in healthcare—where 90 percent of hospitals plan to deploy agents for predictive analytics and clinical documentation—and in manufacturing, where 77 percent of firms already use AI for production, inventory management and customer service. Retailers are close behind, with 69 percent leveraging agents to deliver personalized shopping experiences and automated order tracking. Consulting giants like Deloitte and EY are using agentic platforms to reduce finance-team costs by 25 percent and boost productivity by 40 percent.

- High ROI and Productivity Gains — The Model Context Protocol (MCP), an open standard introduced in late 2024, reduces integration overhead by eliminating custom adapters between AI models and enterprise tools. A 2025 MCP adoption report found that organizations implementing MCP-first architectures experienced 30 percent reductions in development overhead and 50–75 percent time savings on common tasks. In one of the most comprehensive implementations to date, fintech company Block leveraged MCP to cut multi-day tasks down to hours and deploy thousands of employees on AI-driven workflows. Similarly, Verizon reported a nearly 40 percent increase in sales after deploying AI assistants to support 28,000 customer-service representatives. ServiceNow’s integration of AI agents reduced the time required to handle complex cases by 52 percent. A survey revealed that 62 percent of companies anticipate a full 100 percent or greater return on investment from their agent deployments.

- Technological Catalysts — Innovations from AI labs and cloud providers have lowered barriers to building multi-agent ecosystems. Google’s co‑scientist model demonstrates that specialized agents can outperform baseline models in generating novel scientific hypotheses. Amazon’s AgentCore supports any framework or model and includes a dashboard and marketplace for interoperable agents. OpenAI’s ChatGPT agent extends chat interactions with action-taking capabilities and connectors to Gmail, GitHub and terminal environments. Meanwhile, open standards like the Model Context Protocol and competing protocols such as Agent2Agent (A2A) enable agents from different providers to communicate, reducing vendor lock-in and fostering an “agentic web.” These developments democratize the creation of multi-agent systems and make them accessible to enterprises without specialized research teams.

- Regulatory and Risk Considerations — As AI becomes embedded in critical infrastructure, governance and compliance cannot be an afterthought. U.S. state laws are tightening around algorithmic accountability: Colorado now requires developers of high-risk AI systems to exercise reasonable care to prevent discrimination, and New Hampshire has criminalized malicious deepfakes. Tennessee’s ELVIS Act bars unauthorized voice simulations, while Maryland and California have enacted transparency rules for AI use. At the federal level, the transition from President Biden’s AI executive order to President Trump’s deregulation-focused order has created a patchwork of oversight, prompting enterprises to adopt internal governance frameworks and cross-border compliance strategies. Multi-agent architectures can facilitate compliance by isolating functions (e.g., one agent handles data retrieval while another performs sensitive processing) and logging agent interactions for auditability.

- Demand for Intelligent Orchestration — In an era of sprawling enterprise systems, CIOs need AI not only to generate content but to orchestrate business processes end-to-end. Multi-agent systems are well-suited for this because they can maintain context across long tasks, integrate with back-end systems via protocols like MCP, and execute actions such as updating customer records, triggering workflows or drafting code. As manufacturing and supply-chain leaders embrace Industry 4.0 and B2B e‑commerce becomes more complex, the ability of agents to coordinate across ERP, CRM, IoT and payment systems becomes a critical differentiator.

These drivers are converging to push multi-agent AI from experimental novelty to mainstream enterprise capability. Yet adoption requires careful architectural planning and an understanding of the underlying mechanics.

Technical Analysis & Architecture

To understand how multi-agent AI differs from monolithic LLM deployments, consider a traditional generative AI workflow: a user queries an LLM, which responds based on its internal knowledge and parameters. The model does not inherently plan multi-step actions, call external services or integrate with enterprise data unless augmented with plugins. In contrast, a multi-agent system distributes responsibilities across specialized components and coordinates them via an orchestrator.

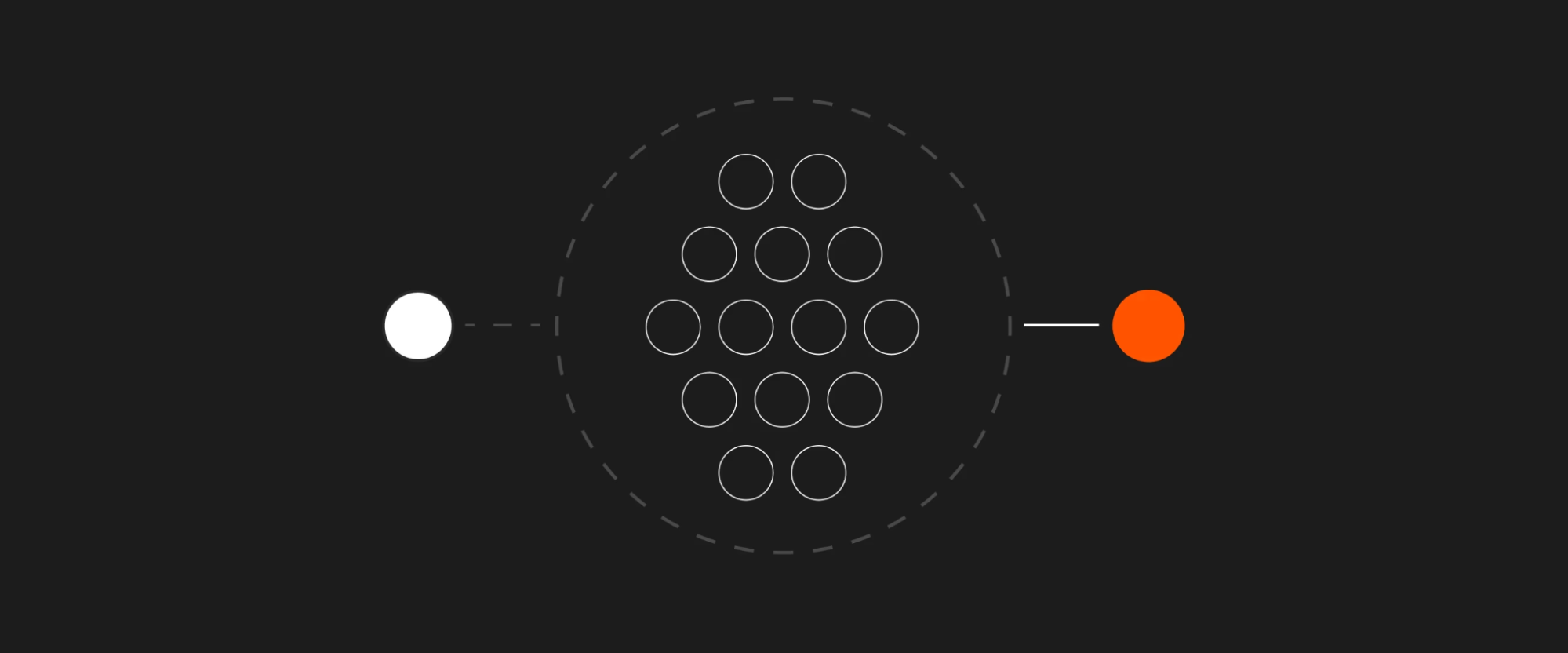

The figure below illustrates a representative design for a multi-agent system in an enterprise context.

Figure 1: Multi‑Agent System Architecture for Enterprise Intelligence. A central orchestrator manages specialized agents (planning, generation, retrieval and evaluation) and interfaces with structured and unstructured data sources, external APIs and enterprise services such as ERP/CRM, logistics and finance systems.

At the heart of the architecture is the orchestrator. This component manages the lifecycle of agents, decomposes user intents into subtasks, routes those tasks to the appropriate specialized agents, aggregates results and ensures that final outputs satisfy constraints such as compliance policies or budget limits. Orchestrators maintain contextual memory, manage asynchronous workflows and handle error recovery. Some orchestrators implement reinforcement learning or tree‑of‑thought methods to explore alternative action sequences and select the most promising one.

The architecture also includes the following components:

- Data Sources — Structured databases (ERP, CRM, data warehouses), unstructured repositories (documents, emails, web pages) and external APIs (weather services, payment gateways, analytics platforms). In a multi-agent system, the retrieval agent does not query these sources directly; instead, the orchestrator uses a protocol like MCP to discover and call connectors. Each connector exposes a uniform interface for a specific tool or data source, abstracting the complexity of integration.

- Planning Agent — Decomposes high-level objectives into a sequence of tasks, estimates resource requirements and calls other agents accordingly. It may use large language models trained on process descriptions or specialized planning algorithms.

- Retrieval Agent — Fetches relevant information from internal or external sources, indexes documents and ensures that the context passed to other agents is up to date. It may employ embedding-based semantic search or retrieval-augmented generation techniques.

- Generation Agent — Produces outputs such as code snippets, summaries, reports or creative content. It can be a fine-tuned LLM or a domain-specific model. For example, Amazon’s AgentCore allows developers to plug any model into an agent wrapper.

- Evaluation Agent — Critiques the outputs of other agents, checks for factual accuracy, bias or compliance violations, and recommends improvements. Google’s co‑scientist uses ranking, reflection and meta-review agents to evaluate hypotheses, and ChatGPT’s agent uses safety filters before executing code or ordering groceries.

These agents communicate via the orchestrator. When a request arrives (for instance, “compile a competitor analysis and schedule a debrief meeting”), the orchestrator consults the planning agent to break down the request into subgoals: collect market data, generate a comparative analysis, draft slides, schedule a meeting and send notifications. The retrieval agent fetches data from market intelligence APIs; the generation agent drafts the analysis and slides; the evaluation agent reviews them for tone and compliance; and finally, the orchestrator calls the calendar API to schedule the meeting and notifies stakeholders via email. Each step is logged, and feedback loops allow agents to refine their outputs.

Integration and Middleware: A critical challenge in enterprise settings is integrating multi-agent systems with existing applications. This is where emerging protocols like MCP and Agent2Agent come into play. MCP acts like a universal “USB‑C port” for AI applications. Instead of writing bespoke adapters for every combination of model and tool, developers implement a single interface per tool and per model. The orchestrator can then discover available connectors and invoke them through standard calls, drastically reducing integration overhead. Agent2Agent extends this concept to inter-agent communication, enabling agents from different vendors to exchange messages securely. By early 2025, the community had built more than 1,000 MCP connectors, and major vendors like Cisco, MongoDB, PayPal and AWS announced support. This interoperability allows enterprises to mix and match agents from different providers and avoid lock-in.

Security and Governance: Multi-agent systems introduce new attack surfaces. Orchestrators must authenticate and authorize agent actions, audit data flows and ensure agents do not exfiltrate sensitive information. Isolation of functions can help: for instance, a sensitive financial agent might run in a sandbox with restricted network access, while a less-sensitive planning agent runs in a shared environment. Agents should implement explainability features, logging the basis for their decisions, and follow enterprise access-control policies. Orchestrators should monitor for prompt injection and adversarial attacks, employing pattern filters and dynamic risk assessments.

Scaling Considerations: Multi-agent systems can be compute-intensive. The orchestrator must manage concurrency across agents, allocate resources and scale horizontally. Google’s co‑scientist uses test-time compute scaling, where the system dynamically allocates more compute to promising hypotheses and prunes less promising ones. Amazon’s AgentCore allows agents to run for up to eight hours, highlighting the need for long-running tasks and distributed state management. Enterprises must plan for GPU and CPU provisioning, caching of intermediate results and scheduling of asynchronous tasks. On the hardware side, new chips like Nvidia’s Blackwell Ultra and the Vera Rubin platform promise to deliver higher token throughput and lower energy consumption, enabling more complex agent workloads. Simultaneously, major cloud providers are investing tens of billions of dollars in AI‑optimized data centers and hydropower deals. CIOs should assess whether to run multi-agent systems on-premises, in private clouds or via managed services, considering latency, compliance and cost.

Comparison of Vendor Solutions: Several platforms illustrate diverse approaches to multi-agent systems. Google’s co‑scientist, built on Gemini 2.0, employs specialized agents—generation, reflection, ranking, evolution and proximity—to propose and evaluate scientific hypotheses. Although not yet commercial, it demonstrates the potential of modular agent architectures for innovation tasks. Amazon’s AgentCore, announced in July 2025, is a general-purpose platform that supports any framework or model and includes a dashboard and marketplace for agents. Agents can communicate using MCP and A2A protocols, and enterprises can deploy private agents and integrate with AWS services. OpenAI’s ChatGPT agent integrates remote browsing and deep research capabilities, allowing users to navigate calendars, write code and call third-party services through a familiar chat interface. While geared toward end users, it exemplifies how multi-agent capabilities can be packaged into consumer-facing tools. Finally, open-source models like Mistral’s Voxtral show how specialized models (e.g., multilingual speech recognition) can be wrapped as agents and integrated into larger workflows.

Data‑Driven Insights & ROI Modeling

Quantifying the business value of multi-agent AI is essential for executive decision-making. The metrics emerging from early adopters provide a compelling case. Figure 2 visualizes adoption rates across sectors. Overall enterprise adoption is projected at 85 percent, healthcare at 90 percent, manufacturing at 77 percent and retail at 69 percent. These numbers underscore the ubiquity of agentic AI across industries.

Figure 2: Estimated Adoption of AI Agents by 2025 across enterprise (general), healthcare, retail and manufacturing sectors.

ROI from multi-agent systems derives from multiple sources:

- Reduced Development Overhead — Traditional AI projects often require custom integrations between models and tools, incurring a “M×N” integration tax. MCP and similar protocols reduce this overhead by standardizing interfaces, resulting in an average 30 percent reduction in development costs. This includes savings on engineering labor, infrastructure maintenance and integration testing.

- Time Savings on Tasks — Automating complex workflows with multiple agents yields substantial time savings. The MCP enterprise adoption report found that common tasks saw 50–75 percent reductions in completion time. For example, Block’s internal deployment reduced multi-day tasks to hours. In call centers, Verizon reported a nearly 40 percent sales uplift because AI agents allowed human representatives to focus more on selling rather than routine call-handling. ServiceNow’s case management productivity improved by 52 percent.

- Productivity Gains — In marketing experiments, AI agents working alongside humans increased productivity per worker by 60 percent. Deloitte and EY reported 25 percent cost reductions and 40 percent productivity increases in finance tasks. These gains stem from AI’s ability to handle repetitive tasks and provide real-time recommendations.

- Revenue Enhancement — Sales organizations adopting agentic AI report average revenue increases of 6–10 percent. AI-driven personalization in retail leads to significant revenue growth, with 69 percent of retailers using AI agents reporting revenue gains. Financial institutions expect a 38 percent increase in profitability by 2035 due to AI agents.

Risk Mitigation and Compliance — Multi-agent systems can include specialized compliance agents that check outputs against regulations and company policies, reducing the likelihood of costly violations. They also generate audit trails that simplify internal and external audits.

To capture these benefits, enterprises should build ROI models that balance:

- Upfront Costs: Licensing or cloud usage fees, integration work, training of staff and potential infrastructure upgrades. New hardware like Nvidia’s Blackwell Ultra GPUs may be necessary for high-performance workloads.

- Operational Savings: Reductions in manual labor, faster turnaround times, improved asset utilization and energy savings (especially if new, more energy-efficient chips are adopted).

- Revenue Gains: New revenue streams enabled by AI-driven products or services, improved conversion rates and increased throughput.

- Intangible Benefits: Improved employee satisfaction, better customer experiences, enhanced innovation capacity and strategic differentiation.

Figure 3 illustrates a sample ROI breakdown derived from the MCP adoption report and related statistics.

Figure 3: ROI Metrics from MCP Adoption. Organizations adopting MCP and multi-agent architectures report around 30 percent reductions in development overhead and 50–75 percent time savings on common tasks. These metrics provide a baseline for ROI calculations, though exact values vary by industry and implementation.

Actionable Recommendations

To translate multi-agent AI from concept to production, CIOs, CTOs and engineering leaders should consider the following recommendations:

- Establish a Multi‑Agent Strategy and Governance Model: Create an internal “AI Orchestration Council” comprising technology leaders, domain experts, compliance officers and cybersecurity specialists. Define a roadmap that aligns agentic AI initiatives with strategic objectives and sets guardrails for data usage, model evaluation and human oversight.

- Adopt Open Protocols and Standardized Connectors: Embrace MCP or similar protocols to avoid bespoke integrations. Inventory existing enterprise tools and data sources, and prioritize building or adopting connectors for critical systems. Participate in open-source communities to influence standards and reduce vendor lock‑in.

- Invest in Orchestration and Tooling: Select or build an orchestrator that can manage agent lifecycles, maintain context across long-running tasks, enforce authentication and handle failure recovery. Evaluate platforms like AgentCore for horizontal scalability, or build an in-house orchestrator using frameworks such as LangChain, Flowise or SuperAGI. Ensure the orchestrator supports concurrency and can integrate with internal scheduling systems.

- Start with High-Impact, Low-Risk Use Cases: Identify processes where errors are tolerable and benefits are clear—such as marketing campaign generation, internal report drafting, knowledge base search or code refactoring. Launch pilot projects with well-defined success metrics and gradually expand into more critical areas after proving reliability.

- Design Modular, Reusable Agents: Encapsulate specific capabilities in agents that can be reused across domains. For example, a “document summarization” agent can serve legal, finance and HR teams. Use containerization to deploy agents in isolated environments and implement versioning to track changes.

- Implement Continuous Learning and Evaluation: Integrate mechanisms for agents to learn from feedback. Use ranking or reflection agents to critique outputs and incorporate reinforcement learning or supervised fine-tuning where appropriate. Monitor performance metrics and bias, and implement automated regression tests.

- Integrate Security and Compliance from Day One: Apply principle-of-least-privilege access controls to agents. Encrypt data in transit and at rest. Audit all agent interactions and maintain logs for regulatory review. Utilize evaluation agents to detect toxic or noncompliant outputs and block them before execution.

- Plan for Scalability and Hardware Requirements: Assess compute needs for training and inference. Explore GPU-optimized cloud options or consider on-premises clusters for data-sensitive workloads. Evaluate emerging hardware like Blackwell Ultra for improved performance-per-watt and consider partnerships with cloud providers investing heavily in AI infrastructure.

- Cultivate a Culture of Collaboration: Agentic AI changes workflows and job roles. Provide training programs to upskill employees, encourage cross-functional collaboration and foster a “human-in-the-loop” mindset where AI augments rather than replaces people. Transparent communication helps mitigate resistance and ensures adoption success.

Conclusion

Multi-agent AI systems are poised to redefine enterprise intelligence. By orchestrating specialized agents through open protocols and robust orchestrators, organizations can transcend the limitations of single LLMs and automate complex workflows at scale. Early adopters across healthcare, manufacturing, retail and finance are already reporting dramatic productivity gains, cost reductions and revenue growth. However, realizing these benefits requires thoughtful architectural design, rigorous governance and a commitment to continuous improvement.

As the market for agentic AI accelerates, with revenues projected to skyrocket over the next decade, CIOs and CTOs must act now to build their intelligence ecosystems. MLVeda’s consulting expertise in enterprise AI, middleware orchestration and workflow automation can help organizations plan, implement and scale multi-agent systems tailored to their operational realities. By embracing modular architectures, adopting open standards and fostering a culture of collaboration, enterprises can unlock the next frontier of productivity and innovation.

Frequently Asked Questions

1. What distinguishes multi-agent AI from traditional AI models?

Traditional AI deployments often rely on a single large model to perform tasks. Multi-agent systems distribute responsibilities across multiple specialized agents orchestrated by a controller. This architecture allows agents to plan, retrieve data, generate content and evaluate outputs cooperatively, enabling more complex and context-aware workflows than a single model can handle.

2. What frameworks or platforms are available for building multi-agent systems?

Developers can choose from open-source frameworks like LangChain, LlamaIndex and SuperAGI, which provide abstractions for orchestrators and agent interactions. Cloud providers offer managed platforms: Amazon’s AgentCore supports any model and includes a marketplace; Google’s experimental co‑scientist demonstrates research capabilities; OpenAI’s ChatGPT agent integrates remote browsing and code execution. Standardization efforts like MCP and A2A enable interoperability across these platforms.

3. How do multi-agent systems integrate with existing ERP/CRM tools?

Protocols like MCP define a uniform interface for connectors. Each enterprise tool (e.g., Salesforce, SAP, Workday) is exposed via an MCP server that handles authentication and API calls. The orchestrator discovers these connectors and calls them on behalf of agents. This eliminates bespoke adapters and ensures that agents can read or update records without direct access to underlying systems.

4. What are the key risks and how can we mitigate them?

Risks include data leakage, compliance violations, hallucinated outputs, prompt injection and system failure. Mitigation involves enforcing role-based access control, using secure enclaves for sensitive agents, implementing evaluation agents to filter harmful outputs, logging all agent actions for auditability, and conducting rigorous testing. Compliance agents can cross-check outputs against regulations, and continuous monitoring detects anomalies.

5. How should enterprises measure the ROI of multi-agent AI?

ROI should consider development cost reductions (e.g., 30 percent via standardized connectors), time savings (50–75 percent on common tasks), productivity gains (up to 60 percent in marketing), revenue uplift (6–10 percent) and intangible benefits such as employee satisfaction and improved compliance. Enterprises should also account for initial investments (licensing, infrastructure, training) and adjust models as real-world data becomes available.

6. Where should we start if we are new to agentic AI?

Begin with a strategic assessment: identify pain points that could benefit from better coordination (e.g., document generation or support triage). Experiment with open-source frameworks to build a simple orchestrator and a few agents. Use standardized protocols to integrate with existing tools, and run pilots with clear success criteria. Engage partners like MLVeda to design robust architectures, manage compliance and scale deployments.